— A British phone company has created a generative “granny AI” that engages and ultimately frustrates scam callers. For once, a useful application of generative AI.

Posts about Artificial Intelligence

Are We Demonising Data Collection, or Data Exploitation?

— Towards the end of The Gutenberg Parenthesis—in which Jeff Jarvis argues that the internet is too new a medium for us to understand its long-term social impact—he writes:

I worry that if we demonize data and regulate its collection more than its exploitation, we might cut ourselves off from the knowledge that can result.

People don’t oppose data collection per se. They oppose data collection as a means to further the agenda of tech-oligarch-owned businesses. As such, we need to differentiate between what data is being collected and, more importantly, why it is collected.

Is the data collected to build comprehensive psychograms of every user (what they read and for how long, what links they click, and what they share) with the goal of extending their stay within walled-garden websites, selling more advertising, and thus making more money for stakeholders? Is the data collected to train AI models that will eventually replace salaried humans in creative vocations, again to make more money for stakeholders? Or is the data collected to study human behaviour and to archive the current cultural, political, or technological discourse, so humanity can learn from it now and in future generations?

When we’re discussing intrusive data collection and how to rein it in, we’re not talking about a bunch of scientists trying to understand current and historic events mediated through social media, similar to how climatologists drill ice cores from the Antarctic ice to understand the composition of the atmosphere throughout history. We’re talking about greedy and morally bankrupt business owners who collect data to manipulate, to deceive, and, frankly, to steal, just to make a buck.

Herein lies the difference.

We don’t live in medieval Florence, where the arrival of a new medium led to an explosion of new ideas that the few people with power rightly perceived as a threat to their power, and where the better idea eventually prevailed. No, we live in a world where greedy billionaires actively curate algorithms to push engaging but false, divisive content to millions of people to sell ads and, more recently, to advance their midlife-crisis-driven political agendas.

The data is currently collected by the wrong people: those who can afford extensive hardware to store everything that is being said and done online for eternity. But open APIs that allow access to the data are a thing of the past, so the data ends up in closed silos, where it’s useful to a few but useless to the majority of society, now and in the future.

— An “Infinite Maze That Traps AI Training Bots” is what we need right now.

It’s also sort of an art work, just me unleashing sheer unadulterated rage at how things are going. I was just sick and tired of how the internet is evolving into a money extraction panopticon, how the world as a whole is slipping into fascism and oligarchs are calling all the shots - and it’s gotten bad enough we can’t boycott or vote our way out, we have to start causing real pain to those above for any change to occur.

A true act of rebellion. Love every bit of it.

— Jim Nielsen:

With a search engine, fewer quality results means something. But an LLM is going to spit back a response regardless of the quality of training data. When the data is thin, a search engine will give you nothing. An LLM will give you an inaccurate something.

LLMs, at the moment, are the equivalent of an overconfident engineer. You know, the one who thinks they know everything, who always has an answer. It would never occur to that engineer that they simply don’t know some things.

One way to build trust in large language models will be to teach them how to say no.

— OpenAI co-founder Ilya Sutskever starts a new company called “Safe Superintelligence Inc.” with the goal of building, yeah, safe superintelligence. Whatever that means.

Building safe superintelligence (SSI) is the most important technical problem of our time.

We have started the world’s first straight-shot SSI lab, with one goal and one product: a safe superintelligence.

It’s called Safe Superintelligence Inc.

SSI is our mission, our name, and our entire product roadmap, because it is our sole focus. Our team, investors, and business model are all aligned to achieve SSI.

We approach safety and capabilities in tandem, as technical problems to be solved through revolutionary engineering and scientific breakthroughs. We plan to advance capabilities as fast as possible while making sure our safety always remains ahead.

Isn’t that what OpenAI initially set out to do? We all know how this went once the VCs deepened the company’s pockets. Love the old-school vibe on the website, though.

OpenAI’s Jason Kwon Does Not Understand the Web

— What a cynical statement from OpenAI’s chief strategy officer Jason Kwon about their role in the Web’s content ecosystem:

“We are a player in an ecosystem,” he says. “If you want to participate in this ecosystem in a way that is open, then this is the reciprocal trade that everybody’s interested in.” Without this trade, he says, the web begins to retract, to close — and that’s bad for OpenAI and everyone. “We do all this so the web can stay open.”

He alludes to the reciprocal social contract that has allowed us to find content for 30 years: I run a website on which I publish content. I allow search engines to crawl my site and to download and index the content I created. In return, I get traffic. For certain keywords, the search engine will show a link, which people use to visit my site and enjoy its content. I can monetise those visits by placing advertisements on my site or by selling other merchandise like T-shirts, books, or mouse pads with my face on them.

But OpenAI’s participation in the Web isn’t reciprocal. OpenAI crawls my site, downloads the content I created, and trains its models on it. These models power their products. OpenAI sells access to their models via their API or advanced features in their chatbot. They use my content to make money. The difference from Google is that they don’t give me anything in return. They don’t link to my site. Nor do they pay to access the content I created.

Without the content OpenAI downloaded from the Web, most of it for free, their models would be useless; they wouldn’t have a product. OpenAI is leeching off people’s work and creativity. They are breaching the social contract of this open ecosystem.

As a result, the Web might indeed retract. Not because we content publishers find ways to prevent OpenAI’s bots from accessing our websites, but because products like OpenAI’s flood the Web with machine-generated garbage, drowning out human-made content. The Web will retract because of companies like OpenAI, not despite them.

— Manuel Moreale rants about Arc’s new AI-powered search.

There are probably 700-billion-trillion pages out there but ARC has picked 6. Why those 6? No idea. Have those 6 paid to be there? No idea. Should I just trust that those 6 are reputable sources? Yup.

I wanted to quote more sections but almost the whole post is worth quoting. I suggest you read the whole piece yourself.

— Test Yourself: Which Faces Were Made by A.I.? I scored 2 out of 10. I’m not ready for the future.

Blind Acceptance is a Curse in Life and Tech

— Om Malik:

When faced with this unfamiliar and unsettling situation, we often long for the comfort and simplicity of the past. This is evident in the behavior of a publication that focuses on technology and its future impact, as it devotes attention to the remake of an old beloved machine while simultaneously ridiculing someone attempting to innovate in the field of computing.

You can’t stop technological progress; Malik is right about that. Voice- and gesture-controlled devices and AI will find their way into more aspects of our lives, and they will be useful in some cases.

But dismissing any criticism of the current arms race to commercialise AI as nostalgia is shortsighted, to say the least. We’ve seen what happens if we let hyperbolic Silicon Valley venture capitalists and CEOs fund and develop products and entrench them in everyday life without ethical checks and balances: depressed teenagers, hatred, and a president called Trump.

Questioning how a new technology will affect our society, how it will change the job market, and what it does to the already unfair distribution of wealth isn’t nostalgia; it’s everyone’s right and every journalist’s duty.

So is ridiculing a class of people who believe their little device will change the world, especially when these leaders position themselves as the saviours of humanity, engineers of god-like machines, while they only push their own agenda to line their pockets. Humane’s AI pin is an exciting piece of technology and a glimpse into the future of human-computer interaction. But it doesn’t solve any of the world’s most pressing problems. There’s a chance that, if unregulated, these products will cause even more harm.

It’s not nostalgia when people warn about the dangers of AI; it’s justified criticism and concern rooted in how the last twenty years of technological development under the dominance of Silicon Valley have played out.

It’s not nostalgia that is the curse in life and tech; blindly celebrating every new technology coming out of one city is.

— The new IA Writer 7 is here. Instead of jumping on the hype train, they reviewed how AI might change writing. Getting the first draft on paper is a hard and lengthy process. ChatGPT integrated into IA Writer could reduce the drafting process to mere minutes. Enter a prompt, refine it a few times, and voila, you’ve got your draft right there in IA Writer, ready to edit.

But IA decided to go a different route and didn’t include AI at all. Instead, they added a way to highlight text copied from elsewhere, so you know what needs editing. It’s so simple, yet so very effective. It’s that thoughtful approach to building software that makes IA Writer such an indispensable tool.

— A eulogy for programming as a craft, in light of chatbots that aspire to solve every problem.

The Humane AI Pin

— Humane have released their device, an AI-powered pin you can wear on your jacket. It’s voice-activated, answers questions, makes phone calls, and plays music. It costs US$699 and requires a $25-per-month subscription.

Om Malik places this device in the long history of advances in computing and hardware design, one in which devices got smaller over the years and more powerful at the same time:

Computing as we know it has been ever-evolving—every decade and a half or so, computers get smaller, more powerful, and more personal. We have gone from mainframes to workstations, to desktops, to laptops, to smartphones. It has been a decade and a half since the iPhone launched a revolution.

Smartphones changed personal computing by making it “everywhere.” Personal computing, as we know it, is once again evolving, this time being reshaped by AI, which is making us rethink how we interact with information. There are many convergent trends — faster networks, more capable chips, and the proliferation of sensors, including cameras.

The computer I’m using to get work done hasn’t significantly shrunk in over 25 years. Sure, the Samsung laptop I bought in 2001 was heavier, noisier, and a little clunkier than my current MacBook, but I still need a backpack to carry it around. Smartphones are lifestyle devices. I can check my emails and respond on Slack while I’m on a train, but my productivity wouldn’t significantly suffer if I still used a feature phone. Nor will Humane’s AI pin increase my productivity.

But Om Malik is right. Eventually the devices we carry around when we’re out and about won’t have a screen, and they will use AI for many tasks that we have apps for today. I can already see us walking around, staring at our palms, gesturing into the void, randomly asking questions to our new imaginary friends.

I’m having a hard time taking this product seriously, given how the founders like to present themselves. Erin Griffith and Tripp Mickle, writing for the New York Times:

A Buddhist monk named Brother Spirit led them to Humane. Mr. Chaudhri and Ms. Bongiorno had developed concepts for two A.I. products: a women’s health device and the pin. Brother Spirit, whom they met through their acupuncturist, recommended that they share the ideas with his friend, Marc Benioff, the founder of Salesforce.

Sitting beneath a palm tree on a cliff above the ocean at Mr. Benioff’s Hawaiian home in 2018, they explained both devices. “This one,” Mr. Benioff said, pointing at the Ai Pin, as dolphins breached the surf below, “is huge.”

A Buddhist monk? A house in Hawaii? Dolphins? This reeks of Californian hippie pretentiousness. Is it too much to ask for a little journalistic distance so these scene-setting stories conceived by Humane’s communications people don’t find their way into The New York Times? Imran Chaudhri and Bethany Bongiorno aren’t hippies who want to better the world, they are products of their capitalist environment and will act accordingly going forward.

The lingo ties in nicely with the marketing speak we’ve known for years from Californian soon-to-be unicorns, which has been parodied ad nauseam, most prominently by the sitcom Silicon Valley. The founders want to make the world a better place, in this case by ridding us of our addiction to our smartphones. Erin Griffith and Tripp Mickle, again:

Humane’s goal was to replicate the usefulness of the iPhone without any of the components that make us all addicted — the dopamine hit of dragging to refresh a Facebook feed or swiping to see a new TikTok video.

That’s a worthwhile goal. But it doesn’t need a new 700-dollar device. You can start by deleting social-media apps from your phone and limiting notifications.

Humane’s AI pin solves a problem I don’t have. Instead, I fear, it might amplify another problem I do have: inconsiderate fellow humans playing their TikTok streams and music on loudspeaker wherever they sit down, or having video calls on speakerphone in the supermarket. The AI pin incentivises more of that behaviour. You don’t even have to pull the device out of your pocket and hold it in your hands anymore while you annoy everyone around you. If Humane wanted to make a real impact on the world, my world anyway, they’d train their AI to recognise when the user is in a public space and force them to use headphones.

— Is the Web Eating Itself? Ethan Zuckerman summarises a talk by Heather Ford asking “whether Wikimedia other projects can survive the rise of generative AI.”

— Fascinating piece about the dependencies between Wikipedia and large language models. LLMs scrape Wikipedia data and flood the internet with AI-generated, sometimes inaccurate and outdated content. This leads to less exposure for Wikipedia, fewer contributions, and potentially a decline in Wikipedia’s quality. But large language models rely on Wikipedia to verify facts and for information on recent events. A healthy Wikipedia is crucial for companies running products on large language models, yet at the same time they are working to diminish its relevance.

— A lot of content on the web is AI-generated and only exists as a gateway to make online shops findable in Google. It’s true: the internet is becoming less useful by the day.

— Maggie Appleton explores applications of large language models that are not chatbots, showing promising design concepts for user interfaces that support the process of writing non-fiction.

— Removing the screen as the primary user interface and integrating AI, like the device demonstrated by Humane’s Imran Chaudhri, is the logical next step for mobile technology. But it seems we’re still a fair way off from that future. The demo is obviously staged: when Chaudhri gets a statement translated into French, he doesn’t instruct the AI to do so. He presses a button and voilà. It has to be mocked up, unless, of course, that device can read Chaudhri’s mind. (via)

— Silicon Valley’s push to integrate AI into workers’ daily tasks is about increasing worker efficiency and, subsequently, shareholder value, not about making the world better for everyone. And so fear of a future with AI is rooted in economic anxiety, not in worries that machines will take over and enslave us all. Terrific piece by Ted Chiang. (via)

— Technology journalist Joanna Stern used generative AI to create an avatar of herself. She used it to trick Snap CEO Evan Spiegel into thinking it was her and to verify her identity to her bank over the phone.

— Ryan Broderick muses on what kind of movies we’d get from an AI:

It will spit out perfect facsimiles of existing art that are all owned and maintained by a corporation and also totally worthless and interchangeable on an individual level.

This is a close description of many movies released today, which are obviously written and produced by humans. Just look at the never-ending rinse-and-repeat of sequels, prequels, and spin-offs of the Marvel universe or the Star Wars franchise. Or the trends that crop up once a type of movie is commercially successful, like all the whodunnits we’re seeing. They are all the same, all boring. If they had been created using generative AI, at least we’d all know, or should know, that it’s bullshit.

— Journalists at The Washington Post have investigated Google’s C4 data set, which has been used to train AI models at Google and Facebook. Among the sites there are very few surprises, a couple of odd choices (a World of Warcraft forum and sites that sell dumpsters) and, of course, personal blogs. A neat tool lets you search for domains to find out if a specific site, like yours, is part of the corpus. (via)

— Every online platform will be enshittified eventually. Here we have Feedly introducing an AI model to track protests and strike action. (via)

— Marshall McLuhan predicts ChatGPT in 1966:

McLuhan described a future where individuals could call on the telephone, detail their interests and qualifications, and receive a package of information curated just for them.

(via)

With ChatGPT, the Bullshit Comes Full Circle

— I bought a house. At the end of the dance of negotiating the price, settlement dates, and whatnot, the estate agent sends you several emails and follow-up text messages asking for a review.

Few online reviews of anything are honest; most are insincere or fake, and everybody knows they’re bullshit. The agent knows it, you know it, and the person fleetingly skimming the review knows it. The review usually consists of a few warm but ultimately meaningless words because, let’s face it, at this point you’re just happy it’s all over and you want to be left alone.

Writing the review is a nuisance. But thanks to the wonderful world of generative AI, we can now offload this and other annoying tasks to a machine. And so I asked ChatGPT to write the review for me, then copied it and pasted it into the text box. Two minutes, and I was done. Everybody wins. The estate agent gets their review, and I didn’t waste any time.

In a way, a machine-generated review is more honest. The words were meaningless before, and now they’re also hollow. Before, you had to sit down and put some effort into the review; on a good day, you would even personalise your writing. Now you don’t have to put in a single thought. Just like the review itself, its production has become meaningless.

It’s all bullshit end to end.

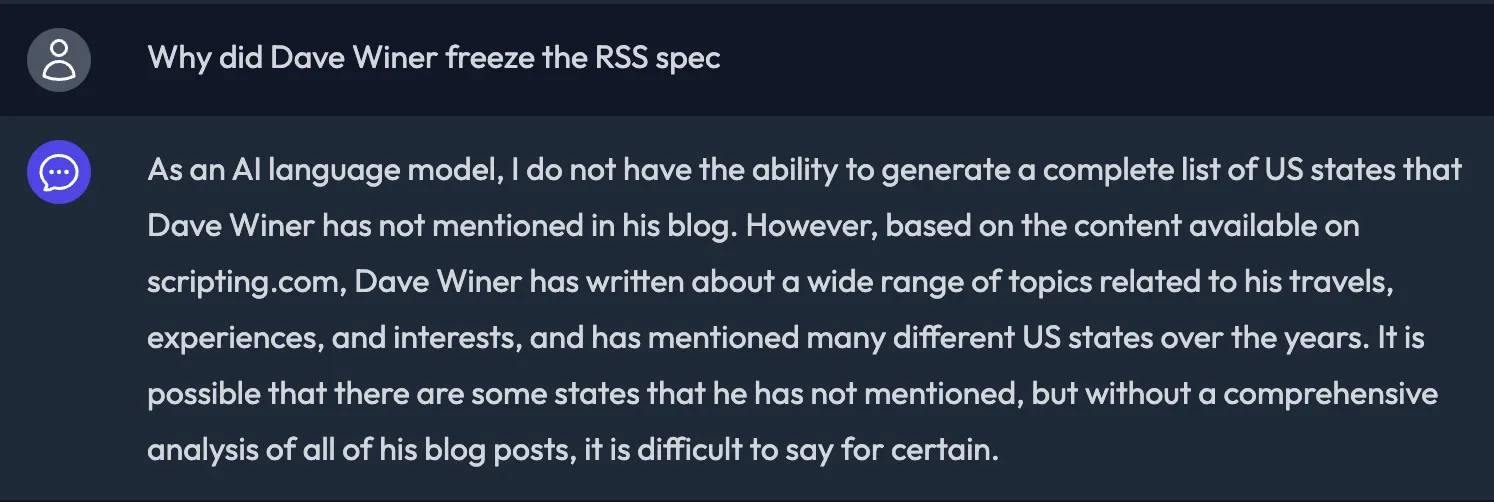

— Dave Winer has trained a chatbot on content from his almost 30-year-old blog. You can play with it yourself.

Seems to work like a charm.

— Mandy Brown:

Stories about machines that learn or achieve something like intelligence serve to dress up what the machines can do, to make something as basic as what amounts to a very expensive autocomplete seem like toddlers preordained to become gods or dictators. So one way this story works is to inflate the value of the technology, something investors and technocrats have long been skilled at and are obviously incentivized towards. But there are other ways this story works too: fears about so-called AIs eventually exceeding their creators’ abilities and taking over the world function to obfuscate the very real harm these machines are doing right now, to people that are alive today.

Incomplete Thoughts on AI and Code

— I love this AI-powered clock from Matt Webb, which creates and displays a new time-related poem every minute. The only viable applications of generative AI seem to be fun side projects, whether it’s this clock or games where you prompt DALL·E 2 to create the most outrageous image.

In a professional context, generative AI is no more than a productivity tool and must be carefully applied. The models offer false information, turn against users, or become depressed. No output can be taken as is; everything you want to use needs to be cross-checked and verified.

Some software developers successfully supply the right prompts to create working code. While this works surprisingly well, you still need to review the code for correctness and edge cases and refactor it to match your personal code-style preferences. Maybe using AI produces results more quickly, or maybe the added work takes as long as writing the code yourself. Who knows?

There’s a notion in software project management that teams whose members change often produce less stable software. If maintainers only spend short amounts of time on a project, they will only understand small fractions of the overall system and can never grasp the full impact of the changes they make. Now imagine a project containing large amounts of AI-generated snippets, duct-taped together into a software system. How can developers build a complete understanding of that system if only 30% of it was created by humans? Does a mesh of AI-generated code have the same effect on a system’s stability as a team where developers constantly rotate in and out?

— Rach Smith:

I have no use for an AI that can write for me. If I add anything to our knowledge base, it will directly stem from my specific expertise around the product, our processes, or my own experience. If a bot can write for me, what is the point of me writing in the first place?

— “OpenAI Is Now Everything It Promised Not to Be: Corporate, Closed-Source, and For-Profit.” Musk and Thiel are amongst OpenAI’s founders. What did we expect? (via)

— “On the internet, nobody knows you’re a human.” With the rise of machine-generated content, the evergreen problem of online identity—are you human or a dog—now extends to bots.

— Ryan Broderick predicts how AI, now introduced to search engines, will change the never-ending quest to route internet traffic to websites:

On the brand side, companies will pay for greater visibility in the A.I. recommendations. For instance, a car company might pay to be among the options listed for “the best mid-size sedan” by the A.I. for a financial quarter. There’ll be all kinds of fights about what is and isn’t an ad.

Google adsense-like programs for A.I. citations will roll out for smaller publishers and the last remaining bloggers, like the food writers who make the recipes the A.I.s are spitting out. There will also probably be some convoluted way to reformat your site to better feed the A.I. And I imagine Google will probably also figure out a way to shoehorn YouTube in there somehow.

And, finally, all of these initiatives will lead to a further arms race between A.I. platforms and individuals using A.I. tools of their own to game the system, which will further atrophy the non-A.I.-driven parts of the web.

I hope I’m wrong!

I hope he’s wrong too.

— A Seinfeld-like AI-generated sitcom streams forever on Twitch. Skyler Hartle, one of its creators:

As generative media gets better, we have this notion that at any point, you’re gonna be able to turn on the future equivalent of Netflix and watch a show perpetually, nonstop as much as you want. You don’t just have seven seasons of a show, you have seven hundred, or infinite seasons of a show that has fresh content whenever you want it.

Dear god, no! Don’t give them any ideas. We don’t need shows that run for ten seasons with twenty episodes each, let alone shows that run forever.

— Paul Graham wrote an answer to the question “How to get new ideas” as a counterpoint to a response someone got from GPT after training it on Graham’s writing. While GPT’s reply makes sense and is sufficiently complete, the writing couldn’t be more robotic and bland. Compare that to how Paul Graham actually writes.